April 25, 2020 / Nirav Shah

In this blog, we explain how to upload large amounts of data to Amazon Simple Storage Service (S3).

AWS offers many tools and services for transferring your on-premises data to AWS.

Here we will look at an effective way to transfer your data using S3 Transfer Acceleration and the AWS CLI.

But first, let’s understand what multipart upload is.

S3 supports multipart uploads for large files. For example, using this feature you can break a 5 GB upload into as many as 1024 separate parts and upload each one independently, as long as each part (except the last) has a size of 5 megabytes (MB) or more. If the upload of a part fails, it can be restarted without affecting any of the other parts. Once you have uploaded all the parts, you ask S3 to assemble the full object with another call to S3.
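To make the arithmetic in this example concrete, here is a small shell sketch that computes how many parts an upload needs for a given part size. The function name `parts_needed` is made up for this illustration, and the sketch assumes binary units (1 MB = 1024 x 1024 bytes).

```shell
# parts_needed SIZE_BYTES PART_BYTES
# Hypothetical helper: prints how many parts are needed to upload SIZE_BYTES
# when every part (except possibly the last) is PART_BYTES long.
parts_needed() {
  size_bytes=$1
  part_bytes=$2
  # Integer ceiling division: a leftover smaller than one part still needs its own part.
  echo $(( (size_bytes + part_bytes - 1) / part_bytes ))
}

five_gb=$(( 5 * 1024 * 1024 * 1024 ))
five_mb=$(( 5 * 1024 * 1024 ))
parts_needed "$five_gb" "$five_mb"   # prints 1024
```

A larger part size means fewer parts, which matters for very large files because S3 limits a single multipart upload to 10,000 parts.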

Consider the following options for improving the performance of uploads and optimizing multipart uploads:

1) Enable Amazon S3 Transfer Acceleration

Amazon S3 Transfer Acceleration can provide fast and secure transfers over long distances between your client and Amazon S3. Transfer Acceleration uses Amazon CloudFront’s globally distributed edge locations.

Transfer Acceleration has additional charges, so be sure to review pricing.

If you want to see the transfer speeds for your use case, review the Amazon S3 Transfer Acceleration Speed Comparison tool.

How to use

There are several ways to enable Transfer Acceleration. I will put the link below so you can pick the one that suits your requirements.
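As one possible route, Transfer Acceleration can be switched on from the AWS CLI. The sketch below wraps the two relevant commands in small helper functions. The function names are hypothetical, and it assumes the AWS CLI is already installed and configured with permission to change the bucket's settings.

```shell
# Hypothetical helper: turn on Transfer Acceleration for one bucket.
enable_transfer_acceleration() {
  bucket=$1
  aws s3api put-bucket-accelerate-configuration \
    --bucket "$bucket" \
    --accelerate-configuration Status=Enabled
}

# Hypothetical helper: make "aws s3" commands in the default profile
# use the accelerate endpoint from now on.
use_accelerate_endpoint() {
  aws configure set default.s3.use_accelerate_endpoint true
}

# Example (bucket name taken from the upload example later in this post):
# enable_transfer_acceleration systut-data-test
# use_accelerate_endpoint
```

The bucket name must be DNS-compliant and must not contain periods for Transfer Acceleration to work.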

Note:- Transfer Acceleration does not support cross-Region copies using

2) Using the AWS CLI

The AWS Command Line Interface (CLI) is a unified tool to manage your AWS services. With just one tool to download and configure, you can control multiple AWS services from the command line and automate them through scripts.

You can install the AWS CLI on any major operating system: macOS, Linux, or Windows.

You can also customize several AWS CLI configuration values for Amazon S3, such as the multipart chunk size and the number of concurrent requests.
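As a rough illustration of such customization, the sketch below sets three S3 transfer settings on the default profile with `aws configure set`. The helper name `tune_s3_transfers` is hypothetical and the values are assumptions chosen only for the example, so tune them for your own network and machine.

```shell
# Hypothetical helper: apply S3 transfer settings to the default profile.
# The values are illustrative assumptions, not recommendations.
tune_s3_transfers() {
  # Number of requests sent to S3 in parallel (the CLI default is 10).
  aws configure set default.s3.max_concurrent_requests 20
  # Files larger than this are uploaded with multipart upload.
  aws configure set default.s3.multipart_threshold 64MB
  # Size of each part in a multipart upload.
  aws configure set default.s3.multipart_chunksize 16MB
}

# Example:
# tune_s3_transfers
```

More concurrent requests can speed up transfers on a fast connection, but they also use more bandwidth and CPU on the machine running the CLI.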

How to use

The steps below assume the Linux operating system.

$ pip install awscli

Get your access keys

1) Sign in to the AWS Management Console and open the IAM console.

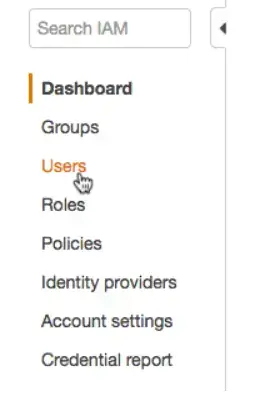

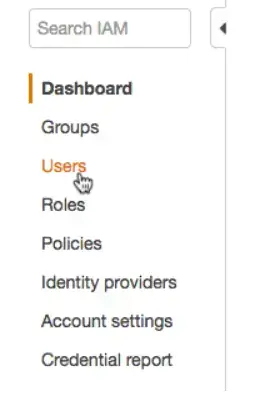

2) Go to Users.

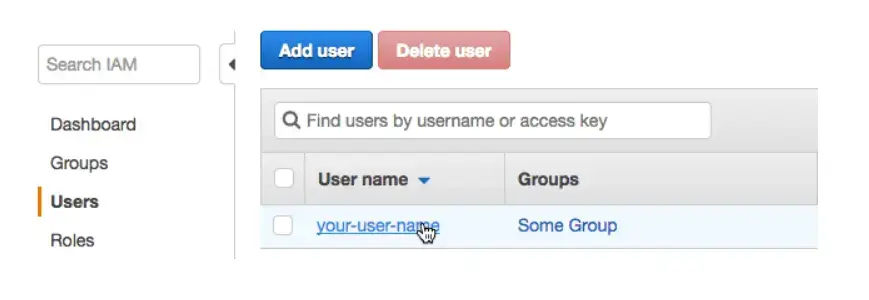

3) Click on your user name

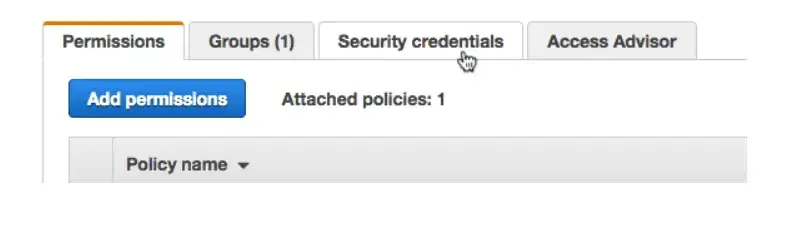

4) Go to the Security credentials tab.

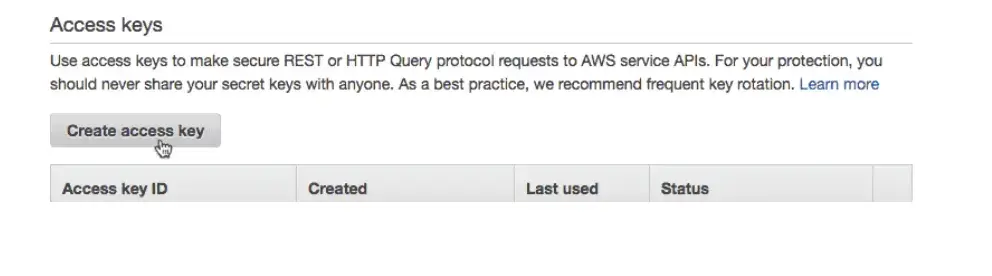

5) Click Create access key

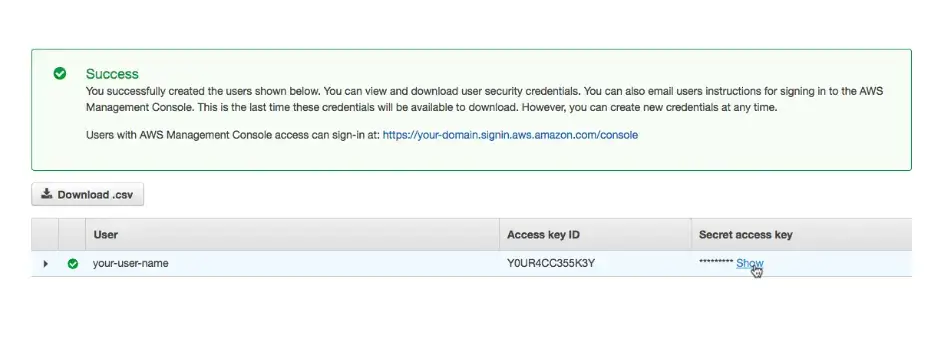

6) You’ll see your Access key ID. Click “Show” to see your Secret access key, then download it and keep it safe.

Once you have successfully installed the AWS CLI, open the command prompt and execute the commands below.
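Before the upload commands can work, the CLI needs the access key pair created in the steps above. One way to store it is sketched here. The helper name `save_aws_credentials`, the placeholder values and the region are assumptions for illustration only.

```shell
# Hypothetical helper: save an access key pair and region in the default profile.
# Arguments: ACCESS_KEY_ID SECRET_ACCESS_KEY REGION
save_aws_credentials() {
  aws configure set aws_access_key_id "$1"
  aws configure set aws_secret_access_key "$2"
  aws configure set region "$3"
}

# Example with placeholder values (replace them with your own):
# save_aws_credentials "YOUR_ACCESS_KEY_ID" "YOUR_SECRET_ACCESS_KEY" "us-east-1"
```

Running `aws configure` with no arguments asks for the same values interactively, which keeps the secret key out of your shell history.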

Uploading large files

Here, assume we are uploading a large 150GB data file to s3://systut-data-test/store_dir/ (that is, the directory store_dir under the bucket systut-data-test), and that the bucket and directory have already been created on S3.

The command is:

$ aws s3 cp ./150GB.data s3://systut-data-test/store_dir/

After it starts to upload the file, it will print a progress message like

Completed 1 part(s) with … file(s) remaining

at the beginning, and a progress message like the following as it nears the end.

Completed 9896 of 9896 part(s) with 1 file(s) remaining

After it successfully uploads the file, it will print a message like

upload: ./150GB.data to s3://systut-data-test/store_dir/150GB.data
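To combine this upload with Transfer Acceleration, the same `aws s3 cp` command can be pointed at the accelerate endpoint. The sketch below assumes acceleration is already enabled on the bucket. The helper name `upload_accelerated` is hypothetical.

```shell
# Hypothetical helper: copy a local file to S3 through the accelerate endpoint.
# Assumes Transfer Acceleration is already enabled on the destination bucket.
# Arguments: LOCAL_FILE S3_DESTINATION
upload_accelerated() {
  aws s3 cp "$1" "$2" --endpoint-url https://s3-accelerate.amazonaws.com
}

# Example:
# upload_accelerated ./150GB.data s3://systut-data-test/store_dir/
# aws s3 ls s3://systut-data-test/store_dir/   # confirm the object is there
```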

But AWS CLI can do much more. Check out the comprehensive documentation at AWS CLI Command Reference.

As a Director of Eternal Web Private Ltd, an AWS consulting partner company, Nirav is responsible for its operations. AWS, cloud computing and digital transformation are some of his favorite topics to talk about. His key focus is helping enterprises adopt technology and solve their business problems with the right cloud solutions.
